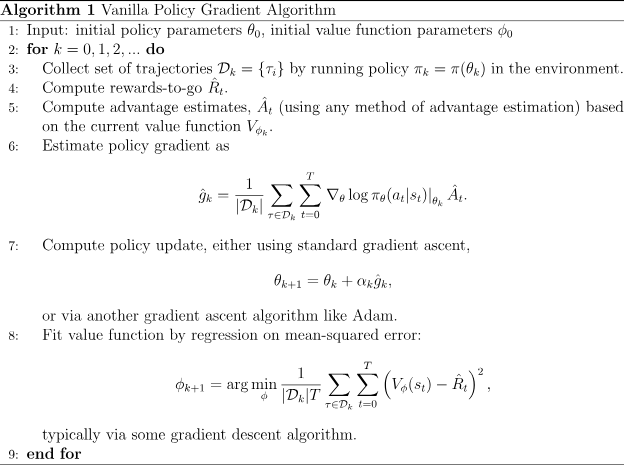

Vanilla Policy Gradient (VPG)

Resources:

- https://spinningup.openai.com/en/latest/algorithms/vpg.html

- Implementation here

Why is there a value function, if we know how to optimize the policy directly?

Add a value network, which estimates expected returns, isn’t required but improves learning in two main ways:

- Variance Reduction: It helps to decrease the variability in policy updates, making learning more stable.

- Better Decision Making: It allows more informed decisions, balancing exploration and exploitation better.

Refer to Generalized Advantage Estimation, if you just used the return of the trajectory, that would be a valid formulation too, but empirically slower?

Spent a little time trying to understand the implementation. The buffer is crucial to get right it seems:

- At the end of very iteration, the replay buffer is reset

- All the other policy gradient methods follow this

VPG is just behavior cloning multiplied by advantage!

I learned VPG after learning about BC! And I was like these equations are awfully similar.

More implementation Details

Looking at implementation here and taking some notes.

Adam Optimizer used:

# Set up optimizers for policy and value function

pi_optimizer = Adam(ac.pi.parameters(), lr=pi_lr)

vf_optimizer = Adam(ac.v.parameters(), lr=vf_lr)Something that is interesting here is that they use an MLP to learn (mean), but for variance , they use a single parameter that is state independent. When you get to SAC, you will see that this is not the case

class MLPGaussianActor(Actor):

def __init__(self, obs_dim, act_dim, hidden_sizes, activation):

super().__init__()

log_std = -0.5 * np.ones(act_dim, dtype=np.float32)

self.log_std = torch.nn.Parameter(torch.as_tensor(log_std))

self.mu_net = mlp([obs_dim] + list(hidden_sizes) + [act_dim], activation)