Counterfactual Regret Minimization (CFR)

https://nn.labml.ai/cfr/index.html

CFR is an extension of Regret Matching for extensive-form games. We use CFR to solve Imperfect Information games, i.e. cases where the game is only partially observable, such as Poker.

This is a really good paper to introduce your to CFR, and a tutorial here.

Link to original paper:

- http://poker.cs.ualberta.ca/publications/NIPS07-cfr.pdf

- https://papers.nips.cc/paper/2007/file/08d98638c6fcd194a4b1e6992063e944-Paper.pdf

This is good blog:

- https://int8.io/counterfactual-regret-minimization-for-poker-ai/

- You should look at MCCFR http://mlanctot.info/files/papers/nips09mccfr.pdf

- They explain CFR in there. I think you should understand the theory behind the regret bounds, even if it is going to take a bit of time. Because I don’t want to be a scam. I want to truly understand what I am doing

So I have good understanding of formalization, but...

I am not really well-versed on the proof. I have an intuition that nash equilibrium means that deviating from the strategy works. But like how the idea that using regret shows that CFR guarantees, I need to be familiar with the math.

Families of CFR

- Monte-Carlo CFR

- CFR-D (CFR-decomposition) (2014)

- allows “sub-linear space costs at the cost of increased computation time”

- CFR+ (2015) used to solve Heads Up Limit Hold’Em

- all regrets are constrained to be non-negative

- Final strategy used is the current strategy at that time (not the average strategy)

- no sampling is used

- Discounted CFR(2018)

- Instant CFR (2019)

- Deep CFR (2020?)

Connection between Regret, average strategies, and Nash Equilibrium

In a zero-sum game, if , then is a equilibrium.

Two papers have been used to produce the article below:

- Regret Minimization in Games with Incomplete Information: The original paper that came up with the counterfactual regret minimization algorithm.

- Monte Carlo Sampling for Regret Minimization in Extensive Games: introduces Monte Carlo Counterfactual Regret Minimization (MCCFR), where we sample from the game tree and estimate the regrets.

The notation written below is entirely taken from the papers above. If you want the original source of truth to produce this article, refer to the original papers. The goal of this article is to produce a more user-friendly introduction, as well as for my own self-learning.

Credits to LabML’s annotated CFR for the original idea to host a this style of notebook. Contents are very similar.

See Poker AI Blog, where we first talk about RPS and regret matching. I think it is helpful to go through that first, but not necessary.

#todo Check-out Lilian Weng’s blog, and Andrej Karpathy’s blog, and see how much notation they use.

Extensive-Form Game with Imperfect Information

Before we dive into the CFR algorithm, we need to first formally the game we are trying to solve. A basic understanding of notation behind Functions, Relations and Sets is needed to grasp the notation below (if rusty, check out a quick cheatsheet here).

No-Limit Texas Hold’Em Poker is a finite extensive game with imperfect information:

- Finite because we can guarantee that the game will terminate

- Extensive because there are multiple decisions made in a single game, unlike Rock Paper Scissors, which is called a normal-form game

- Imperfect Information because each player can only make partial observations, i.e they cannot see the other player’s cards

A finite extensive game with imperfect information has the following components):

- A finite set of Players

- A finite set of Histories

- A Player Function

- A Chance Function

- Information Sets

- Utility Function

Player

An imperfect information extensive game (IIEG) has a finite set of players.

- We denote a particular player by , where

- For Heads-Up Poker, there are only two players, so

History

An IIEG has finite set of possible histories of actions.

- Each history denotes a sequence of legal actions

- is the set of terminal histories, , i.e. when we have reached the end of a game

- is the set of actions available after a non-terminal history #todo you have both and which is confusing

Player Function

A player function assigns each nonterminal history to an element of , which tells you whose turn it is

- When , the function returns the player who has to take an action after non-terminal history

- When , then chance determines the action taken after history

- This is when for example, it is time to draw a new card. It is not up to the player to decide

Chance Function

- A function that assigns a probability measure for all , for each history where . In Poker, this simply assigns an equal probability for each card of the remaining deck that gets drawn.

Information Set

- An information partition of for each player , where A(h’)hh’$ are members of the same partition

- information partition is confusing, what is this??

- Each information set for player contains a set of histories that look identical to player

- Note that we will often just write instead of

Utility

A utility function for each player

- The function returns how much player wins/loses at the end of a game given a terminal history

- is the range of utilities to player

- when , and , we have a zero-sum extensive game (such as in the case of Heads-Up Poker)

Some Clarifications:

- when you are initially dealt two cards, that is part of the history. Then, your opponent gets dealt two cards. However, you won’t be able to see that information about the history.

Strategy and Equilibria

Now that we have formally defined the notation for the extensive game, let us define strategies to play this game:

Strategy

- A strategy of player is a function that assigns a distribution over for each

- As player , we follow our strategy to decide which action to pick throughout the game

- represents the probability of choosing action given information set for player

- Two types of strategies:

- Pure strategy (deterministic): Chooses a single action with probability 1.

- Mixed strategy (): At least two actions played with positive probability

- is the set of all strategies for player

Parallel: If you’ve dabbled/are an expert in reinforcement learning, strategy corresponds to the policy that an agent follows, where represents the probability of choosing action given that the agent is in state .

However, in Game Theory, we use to denote reach probability (see more below).

Strategy Profile

- A strategy profile consists of a strategy for each player

- refers to all the strategies in strategy profile excluding

Reach Probability of History

This is a really crucial idea that one must understand before moving on.

- is the reach probability of history (probability of occurring) if all players choose to play according to strategy

In other words, it’s the probability of each player multiplied together. But shouldn’t it be the contribution from both players? So draw out Rock papers scissors and the probabilities. Example: , , where the actions are If each player plays RPS with 1/3 probability, then Player 2 doesn’t play at this point, so how do you compute ?

- Well, the probability is just 1

so its actually Let’s decompose

So say it’s Rock Rock. From the perspective We actually only know like what

This is telling the probability that you are to end up in history .

The probability of reach an information set under strategy profile given by

- Notice that the above is a sum, and not a product, because multiple histories can be the same InfoSet, so we increase our chances of encountering a particular information set by summing the probabilities.

We also define , the reach probability of a terminal history given the current history under strategy profile :

Expected Payoff

The utility function we covered gives us some payoff for player at terminal history . How can we measure our average payoff as we play the game more and more? That is the idea of an expected payoff.

The expected payoff for player is given by

This is simply a single value that tells you how good your strategy profile . That is, if you play according to , how much money do you expect to win or lose? our goal is to reach a Nash Equilibrium, so .

- Intuitively, we are simply taking a weighted average, multiplying our payoff of each terminal history by how likely we are to reach that history using strategy profile , given by . Note that .

- The expected payoff is different depending on what strategy profile

We will also sometimes use the notation , which is the expected payoff for player if all players play according to the strategy profile .

- We use this expanded form because we will also add a superscript in \sigma^\textcolor{orange}{t}_i, so you can obtain the utility at two different times, for example

We can also talk about expected payoff at a particular nonterminal history (this is also sometimes referred to as just expected value at a particular history ) We also call the above counterfactual value at nonterminal history , but instead of histories, we are interested in getting the value for an information set .

- I am quite confused by why this equation is the way it is, so you should see below for counterfactual value.

Serendipity: This is quite similar to the value functions we see in Reinforcement Learning, where we do a Monte-Carlo rollout to sample the rewards.

Some more notes about discrepancies

Note: Notice that we were defining the domain of utility function as the set of terminal histories , but now we define it as a strategy profile.#todo Explain discrepancy.

Nash Equilibrium

Our goal is to find the Nash Equilibrium. This is a strategy profile in which no players can improve by deviating from their strategies.

Formally, in a two-player extensive game, a Nash Equilibrium is a strategy profile where the two inequalities are satisfied:

- For instance, the first equation states for take any strategy for player in the set of all strategies for player , the expected payoff for player in this strategy profile cannot improve

An approximation of a Nash Equilibrium, called -Nash Equilibrium, is a strategy profile where

Best Response

Given a strategy profile , we define a player ’s best response as

In other words, player ’s best response is a strategy that maximizes their expected payoff assuming all other players play according to .

Exploitability

Exploitability is a measure of how close is to an equilibrium

This will be important to measure how close the strategy profile we generate is to the Nash Equilibrium.

Regret

As we will come to understand shortly, CFR is based on the very powerful yet simple idea of minimizing regret over time. Let us first understand what regret is about.

Regret is a measure of how much one regrets not having chosen an action. It is the difference between the utility/reward of that action and the action we actually chose, with respect to the fixed choices of other players.

The overall average regret of player at time is

- where is the strategy used by player on round

- is utility of a strategy profile with and

- Think of as the “optimal” strategy profile, just like Optimal Value Function in RL

Notice, however, that we need to find which maximizes this difference in utilities. This is the difficulty… we don’t know this unless we loop over all possible strategies, which is computationally intractable. CFR will comes up with a solution to represent a tractable regret.

- Reinforcement Learning has a similar idea when trying to find the Optimal Policy

We also define the average strategy for player from time to . This is the final strategy would be used. For each information set , for each , we define

So for a given player , we want a regret minimizing algorithm such that as .

Connection between Regret, Average Strategy and Nash Equilibrium

There is this connection between regret , average strategies , and Nash Equilibrium, which is extremely important and going to help us unlock the power of CFR!

Theorem 1: In a zero-sum game, if , then is a equilibrium.

#todo Try to prove this. You can do it

Counterfactual Regret Minimization

So the question is, how can we figure out this set of strategies such that regret is minimized? The key idea is to decompose this overall regret into a set of additive regret terms, which can be minimized independently. These individual regret terms are called counterfactual regret, and defined on an individual information set .

Counterfactual?

In game theory, a counterfactual is a hypothetical scenario that considers what would have happened if a player had made a different decision at some point in the game. In CFR, we consider these hypothetical situations.

We are now finally getting into the heart of the counterfactual regret minimization (CFR) algorithm.

Let be an information set of player , and let be the subset of all terminal histories where a prefix of the history is in the information set . for let be that prefix.

- So basically is converting the information set to the history version where we can see both players actions.

We first define the counterfactual value as

- is the reach probability of history

- is the payoff for player at terminal history

- Remember that we can only compute the utility for terminal histories, therefore must be a terminal history

This is pretty much the same idea as [[notes/Counterfactual Regret Minimization#Expected Payoff|Expected Payoff]] which we just talked about above. Think of this as the Expected Value of a particular information set . The greater is, the greater we expect the money we make at this information set.

- Similar to the idea of Value Function for RL

We use counterfactual values to update counterfactual regrets.

The immediate counterfactual regret is given by

where

- In other words, the immediate counterfactual regret is the maximum immediate counterfactual regret out of all possible actions

- is the strategy profile identical to , except that player always chooses action at information set

The reason we are interested in the immediate counterfactual regret is because of this key insight that is going to be powering CFR.

Theorem 2:

- Note that

This tells us that the overall regret is bounded by the sum of counterfactual regret. Thus, if we want to minimize our overall regret , we can simply minimize our cumulative immediate counterfactual regret. And because of theorem 1, we know that minimizing allows us to converge to a -Nash Equilibrium!

We now have all the pieces of the puzzle. How can we update our strategy profile such that the cumulative counterfactual regret is minimized over time?

We use the following update rule which applies Blackwell’s Approachability Theorem to regret (called Regret Matching):

- where

- In other words, actions are selected in proportion to the amount of positive counterfactual regret for not playing that action. If no actions have any positive counterfactual regret, then the action is selected randomly.

- Since we cannot calculate , we use in practice

If a player selects uses a strategy according to the equation above, then we can guarantee the following.

Theorem 3:

Intuition

Okay, I’ve explained a lot of the above, but then how this translates in code is not super intuitive. The pseudocode I referenced was from a CFR tutorial, the original paper does not have anything like that.

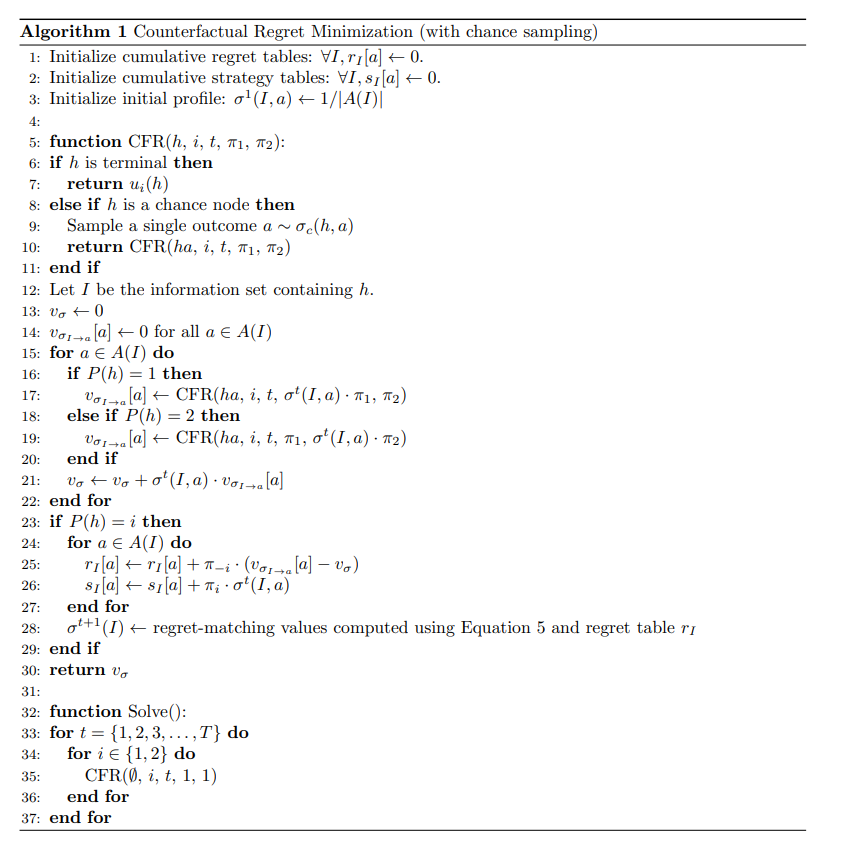

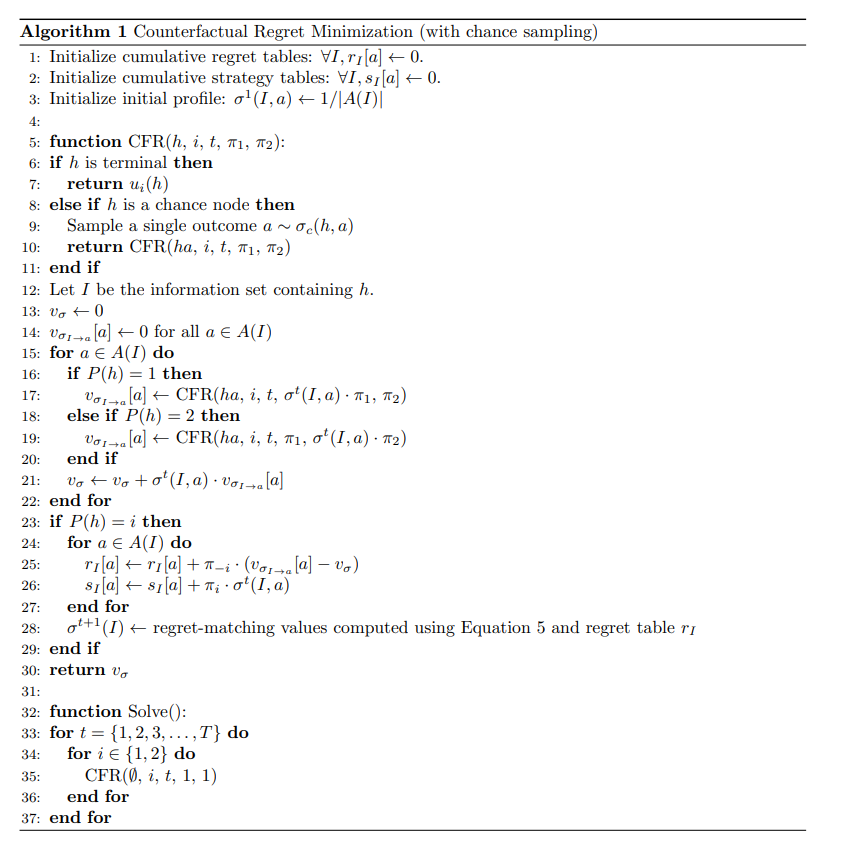

Pseudocode for vanilla CFR.

Pseudocode for vanilla CFR.

Essentially, you initialize your strategy profile to a random uniform probability of choosing an action for each Information Set.

Then, you can start iterating the algorithm, updating the counterfactual value by self-play, the value updates are propagated through the terminal histories, and weighted by this reach probability (determined by our current strategy).

The strategy profile is then selected proportional the regret using Regret Matching.

Monte-Carlo CFR

With Vanilla CFR, each iteration requires us to traverse an entire game tree since we update for every single information set and every action on each iteration.

However, this is simply too slow with large games such as No-Limit Heads Up Texas Hold’Em Poker. MCCFR can help the speedup convergence to an approximate Nash Equilibrium.

With MCCFR, we avoid traversing the entire game tree on each iteration while still having the immediate counterfactual regrets be unchanged in expectation. We do this by restricting the terminal histories that we consider on each iteration.

Let be a set of subsets of terminal histories (i.e. ), such that their union spans the set (i.e. (.

Each of these subsets is called a block .

On each iteration, we will sample one of these blocks and only consider that block. Let be the probability of considering for the current iterations (where ).

The probability of considering terminal history on the current iteration is given by Then, the sampled counterfactual value when updating block is

The paper proves that the expectations of the sampled counterfactual value is equal to the counterfactual value

So in the immediate counterfactual regret, we can use the following

Note to self

I am still having trouble going from the math equations to a full on algorithm that does this.

There is also a speedup to this that I am not aware about.

Old notes

Explanation of notations:

- is a strategy profile which consists of a strategy for each player

- is the strategy for player at time (assigns probability distributions over )

- is the set of all game actions

- is an Information Set

- The is the information that a player can see. Distinction between State and Set

- is the set of legal actions for information set

- History is the sequence of actions from the root of the game

- is the reach probability of history if all players choose to play according to strategy

- This is where “chance sampling” comes into play, because we consider reach probabilities to more efficiently train our algorithm

More notation:

-

denotes the set of all terminal game histories (sequences from root to leaf)

-

is the utility of player at terminal history

-

The counterfactual value at non-terminal history is

We use counterfactual values to update counterfactual regrets.

The counterfactual regret of not acting action at history is

- where is the profile except that at , action is always taken

The counterfactual regret of not taking action at information set is just the sum of histories, so

- You know the counterfactual values since this is a self-play algorithm

- is the regret of player at time for not taking action at information set (measures how much player would rather play action at information set )

- The cumulative counterfactual regret is

We use the cumulative counterfactual regret to update the current strategy profile via Regret Matching:

- where

In Games of larger state spaces → For more complex games, where there are quintillions of information sets, this is too large to iterate over for convergence of mixed strategies.

The key idea is that we can approximate information sets, by using imperfect recall. See Game Abstraction

Theoretical Analysis (Regret Bounds)

Notes for me explaining the poker AI

My thoughts: CFR finds the best response to the opponent’s strategy. Over time, this converges to the Nash Equilibrium.