Object Detection

- Object detection works well with an online connection, but it doesn’t work once deployed because it still requires a connection

- PointPillars doesn’t work yet (yes, because it still needs to get merged)

Object detection is the field of Computer Vision that deals with the localization (by drawing bounding boxes) and classification of objects contained in an image/video.

Some challenges:

- Objects are not fully observed, either because of occlusion or truncation (out of bounds of the camera)

- Scale (objects still need to be recognized at various scales)

- Illumination changes (recognize even when it’s very bright or very dark)

Using Anchor Boxes

Object Detection occlusion should be:

From the article

https://www.v7labs.com/blog/object-detection-guide

You don’t always want to use an Object Detection algorithm. Semantic Segmentation, Instance Segmentation or Classification might sometimes be a better alternative.

- Objects that are elongated—Use Instance Segmentation.

- Objects that have no physical presence—Use classification

- Objects that have no clear boundaries at different angles—Use semantic segmentation

- Objects that are often occluded—Use Instance Segmentation if possible

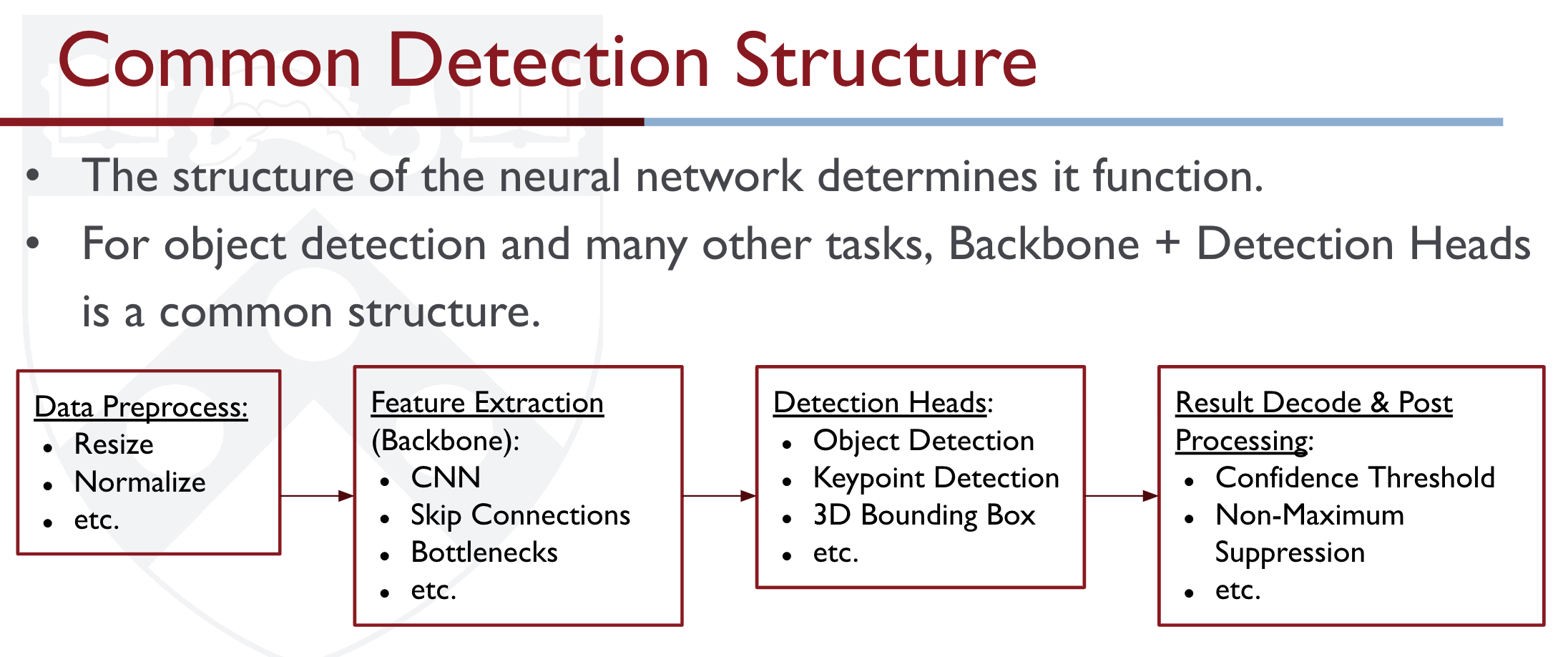

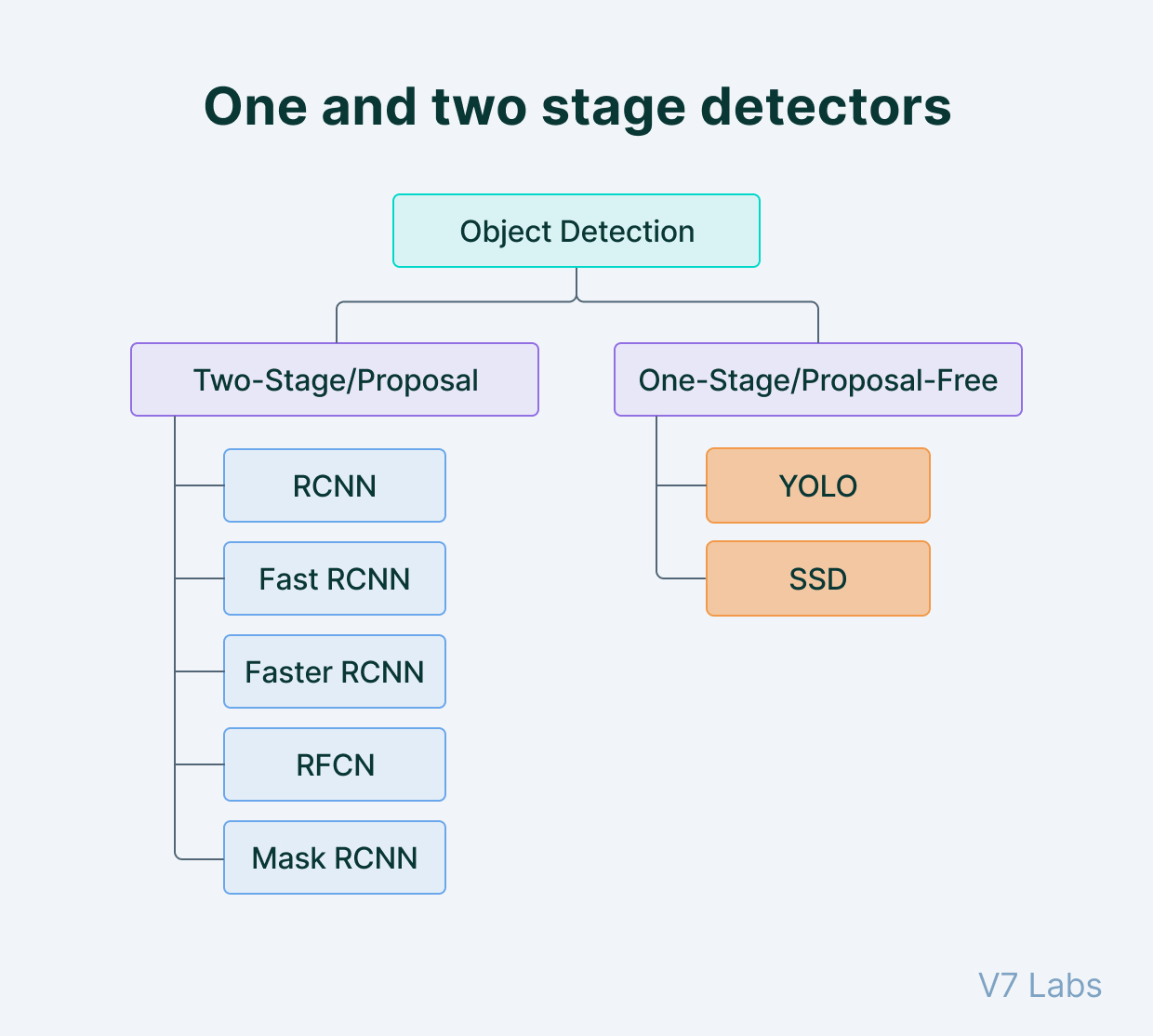

Two types of object detectors:

- Single-stage object detectors (end-to-end)

- Two-stage detectors

    - Stage 1: extract RoIs (regions of interest)

    - Stage 2: classify and regress the RoIs

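So which one is better, single-stage or two-stage? The paper “Focal Loss for Dense Object Detection” (you have it on Zotero) explains why one performs better than the other: it attributes the accuracy gap of single-stage detectors to the extreme foreground-background class imbalance seen during training, and proposes the focal loss to address it.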

Feature extractor

Feature extractors are the most computationally expensive component of the 2D object detector. Most common extractors:

- VGG

- ResNet

- Inception

Computer Vision (Camera)

- SSD

- YOLO

- Know the differences with v2, v3, v4, etc.

- DINO → Super cool self-supervised method

- CenterNet https://github.com/xingyizhou/CenterNet

Lidar

Approaches

- Sliding Window (Exhaustive Search)

    - At its core, an object detection algorithm is just an object recognition algorithm. The most straightforward approach is to generate sub-regions (patches) and apply object recognition to each one. We thus exhaustively search for objects over the entire image (search different locations + different scales).

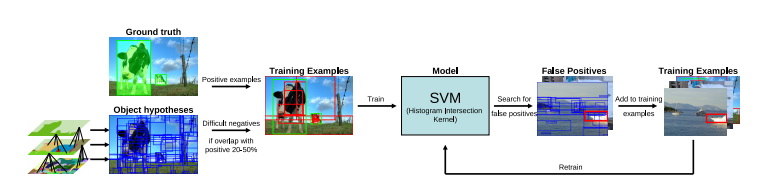

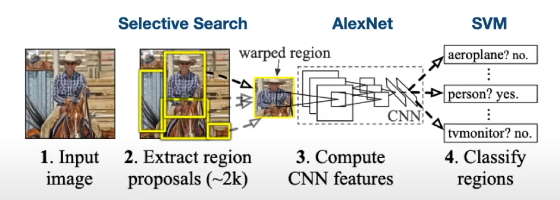
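The exhaustive search described above can be sketched in a few lines. This is only a minimal illustration: the window sizes, the stride, and the `classify` callback are assumptions made for the example, and a real detector would crop the image at each box and run an actual recognition model on the crop.

```python
from typing import Callable, Iterator, List, Tuple

Box = Tuple[int, int, int, int]  # (x, y, width, height)


def sliding_windows(
    image_w: int,
    image_h: int,
    window_sizes=((64, 64), (128, 128)),  # assumed scales, purely illustrative
    stride: int = 32,                     # assumed step between windows
) -> Iterator[Box]:
    """Yield every (x, y, w, h) patch for each window size (scale)."""
    for win_w, win_h in window_sizes:
        # Stop early enough that the window never leaves the image.
        for y in range(0, image_h - win_h + 1, stride):
            for x in range(0, image_w - win_w + 1, stride):
                yield (x, y, win_w, win_h)


def detect(
    image_w: int,
    image_h: int,
    classify: Callable[[Box], Tuple[str, float]],  # hypothetical recognizer
    threshold: float = 0.5,
) -> List[Tuple[Box, str, float]]:
    """Run the recognizer on every window and keep the confident hits."""
    detections = []
    for box in sliding_windows(image_w, image_h):
        label, score = classify(box)
        if score >= threshold:
            detections.append((box, label, score))
    return detections
```

Even on a tiny 128x128 image this produces ten windows for two scales, which hints at why the approach becomes slow on real images with many scales and a small stride.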

- Region Proposal (Selective Search) → R-CNN in 2013

    Region proposal algorithms identify prospective objects in an image using segmentation. Selective Search is a region proposal algorithm used in object detection. It is designed to be fast with a very high recall. It computes a hierarchical grouping of similar regions based on color, texture, size and shape compatibility.

Steps:

- Generate an initial sub-segmentation of the input image using the method described by Felzenszwalb et al. (see Semantic Segmentation)

- Recursively combine the smaller similar regions into larger ones. We use a greedy algorithm:

    - From the set of regions, choose the two that are most similar.

    - Combine them into a single, larger region.

    - Repeat the above steps for multiple iterations.

- Use the segmented region proposals to generate candidate object locations.

Afterwards, we can feed each proposed region into an SVM classifier.
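The greedy grouping step can be sketched as follows. This is a simplified illustration, not the actual Selective Search implementation: the `Region` type is hypothetical, similarity is reduced to a single mean-intensity value instead of color, texture, size and shape, and adjacency between regions is ignored.

```python
from dataclasses import dataclass
from itertools import combinations
from typing import List, Tuple

BBox = Tuple[int, int, int, int]  # (x_min, y_min, x_max, y_max)


@dataclass(frozen=True)
class Region:
    box: BBox
    size: int     # number of pixels in the region
    color: float  # mean intensity, a stand-in for a real colour histogram


def similarity(a: Region, b: Region) -> float:
    """Toy similarity (assumption): closer mean colour means more similar."""
    return -abs(a.color - b.color)


def merge(a: Region, b: Region) -> Region:
    """Combine two regions into one larger region covering both."""
    box = (min(a.box[0], b.box[0]), min(a.box[1], b.box[1]),
           max(a.box[2], b.box[2]), max(a.box[3], b.box[3]))
    size = a.size + b.size
    color = (a.color * a.size + b.color * b.size) / size  # size-weighted mean
    return Region(box, size, color)


def greedy_grouping(initial: List[Region]) -> List[BBox]:
    """Merge the most similar pair until one region is left.

    Every region seen along the way contributes one candidate box.
    """
    regions = list(initial)
    proposals = [r.box for r in regions]
    while len(regions) > 1:
        a, b = max(combinations(regions, 2), key=lambda pair: similarity(*pair))
        regions.remove(a)
        regions.remove(b)
        merged = merge(a, b)
        regions.append(merged)
        proposals.append(merged.box)
    return proposals
```

Because boxes are collected at every level of the hierarchy, small and large candidates both end up in the proposal list, which is where the high recall comes from.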

Region Proposal, Key Disadvantages:

- 3 independently trained components (not end-to-end)

- Slow!

Alternatives started to emerge: Fast(er) R-CNN, YOLO and SSD.

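SSD eliminates the bbox proposal step. It works by using a more concise exhaustive search: it evaluates a fixed set of default boxes at several scales and aspect ratios, and regresses offsets to adjust each box to the object.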
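The "more concise exhaustive search" can be pictured as a fixed grid of default (anchor) boxes laid over a feature map. The sketch below generates such boxes in normalized coordinates; the grid size, scale and aspect ratios are illustrative assumptions, and a real SSD model repeats this over several feature maps with different scales.

```python
import math
from typing import List, Tuple

CenterBox = Tuple[float, float, float, float]  # (cx, cy, w, h), all in [0, 1]


def default_boxes(
    grid_size: int,
    scale: float,
    aspect_ratios=(1.0, 2.0, 0.5),  # assumed ratios, purely illustrative
) -> List[CenterBox]:
    """Place one box per aspect ratio at the centre of every grid cell."""
    boxes = []
    for row in range(grid_size):
        for col in range(grid_size):
            cx = (col + 0.5) / grid_size
            cy = (row + 0.5) / grid_size
            for ratio in aspect_ratios:
                # Width grows and height shrinks with the aspect ratio,
                # so the box area stays roughly scale**2.
                w = scale * math.sqrt(ratio)
                h = scale / math.sqrt(ratio)
                boxes.append((cx, cy, w, h))
    return boxes
```

The network then predicts, for every default box, class scores plus four offsets that nudge the box onto the object, so no separate proposal stage is needed.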

Resources

title: Object Recognition vs. Object Detection (Harder)

Object recognition (i.e. Image Classification) identifies which objects are present in an image. It only produces class labels with probabilities. Ex: Dog (97%).

**Object Classification with Localization** only handles localizing a single object.

- This is done just like [[Image Classification]]: you add four extra outputs for the bounding box and train on them as well, which gives you a multi-task loss.

- Actually, Human Pose Estimation can be done like this. And that's why Mediapipe only works with one pose. Because it treats it as an Object Classification with Localization problem??

On the other hand, object detection also outputs bounding boxes (x, y, width, height) to indicate the locations of the detected objects (there can be multiple objects).

Object detection comes down to drawing bounding boxes around detected objects, which allows us to locate them in a given scene.

Andrew Ng Object Localization

If you only need to localize a single object with a bounding box, you can define the target label $y$ as

$$y = [p_c, b_x, b_y, b_h, b_w, c_1, \dots, c_n]^T$$

Just like in an Image Classification problem, we output this vector $y$, with the 4 bbox values added, almost like a linear regression problem.

- $p_c$ is 0 or 1, 1 meaning there is an object (the predicted value is the probability that there is an object)

- $c_i$ is the class $i$
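A minimal sketch of building such a target vector and scoring a prediction against it. The layout `[p_c, b_x, b_y, b_h, b_w, c_1, ..., c_n]` and the plain squared-error loss are assumptions chosen for simplicity; in practice the class terms would more likely use a log-likelihood loss and the box terms a regression loss.

```python
from typing import List, Optional, Sequence, Tuple


def make_target(
    num_classes: int,
    box: Optional[Tuple[float, float, float, float]] = None,  # (b_x, b_y, b_h, b_w)
    class_index: Optional[int] = None,
) -> List[float]:
    """Build [p_c, b_x, b_y, b_h, b_w, c_1..c_n] for one image."""
    if box is None or class_index is None:
        # No object: p_c = 0 and the remaining entries are "don't care".
        return [0.0] * (5 + num_classes)
    one_hot = [0.0] * num_classes
    one_hot[class_index] = 1.0
    return [1.0, *box, *one_hot]


def localization_loss(y_true: Sequence[float], y_pred: Sequence[float]) -> float:
    """Multi-task squared error that ignores box and class when no object exists."""
    if y_true[0] == 1.0:
        # Object present: penalize objectness, box and class terms together.
        return sum((t - p) ** 2 for t, p in zip(y_true, y_pred))
    # No object: only the objectness term p_c matters.
    return (y_true[0] - y_pred[0]) ** 2
```

When `p_c` is 0 the box and class entries of the prediction are not penalized at all, which is why their values in the target can be left arbitrary.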

However, for object detection with multiple objects, you can start by trying sliding-window detection (see Approaches above).