CS451: Data-Intensive Distributed Computing

https://student.cs.uwaterloo.ca/~cs451/syllabus.html

For Steven: You’re probably looking for Latency numbers every programmer should know.

Really great teacher. Has gone downhill as the term progressed. Became boring. Though slides are pretty good.

Things to master for final:

- LSH-hashing

- DStream??

- Syntax for Spark (things like reducebykey, tf does that do?)

- Bigram frequency, boolean retrieval, page rank, how the logic is actually implemented?? cuz i forgot

Concepts

A3:

- Need to get an more efficient reducer? Like doing (A,B): C instead of A: (B,C)?

- But aggregating the counts seems like a pain. It’s like doing pairs instead of stripes

Running the reference checks

hadoop jar target/assignments-1.0.jar ca.uwaterloo.cs451.a3.BuildInvertedIndex \

-input data/Shakespeare.txt -output cs451-s36gong-a3-index-shakespeare

Query

hadoop jar target/assignments-1.0.jar ca.uwaterloo.cs451.a3.BooleanRetrieval \

-index cs451-s36gong-a3-index-shakespeare -collection data/Shakespeare.txt \

-query "outrageous fortune AND"

hadoop jar target/assignments-1.0.jar ca.uwaterloo.cs451.a3.BooleanRetrieval \

-index cs451-s36gong-a3-index-shakespeare -collection data/Shakespeare.txt \

-query "white red OR rose AND pluck AND"

First output

Query: outrageous fortune AND

1073319 The slings and arrows of outrageous fortune

Second output

Query: white red OR rose AND pluck AND

1713274 From off this brier pluck a white rose with me.

1713428 Pluck a red rose from off this thorn with me.

1713566 I pluck this white rose with Plantagenet.

1713612 SUFFOLK. I pluck this red rose with young Somerset,

1714623 In sign whereof I pluck a white rose too.

Chapter 2 and 3

Those notes are in MapReduce, RDD and Spark.

Chapter 4: Dealing with Text

The goal is to generate text. We can use a probabilistic model.

P(“I saw a van”) = P(“I”) x P(“saw” | “I”) x P(“a” | “I saw”) x P(“van” | “I saw a”)

But how feasible is this?

- Not really. We use a smaller limit: the n-gram

- Limit of words

Basic Idea: Probability of next word only depends on the previous (N – 1) words

- N = 1 : Unigram Model- P(w1 ,w2 ,w3 ,…) = P(w1 ) P(w2 ) … P(wk )

- N = 2 : Bigram Model -

State of the art rarely goes above 5-gram.

We apply laplace smoothing for the bigram probabilities.

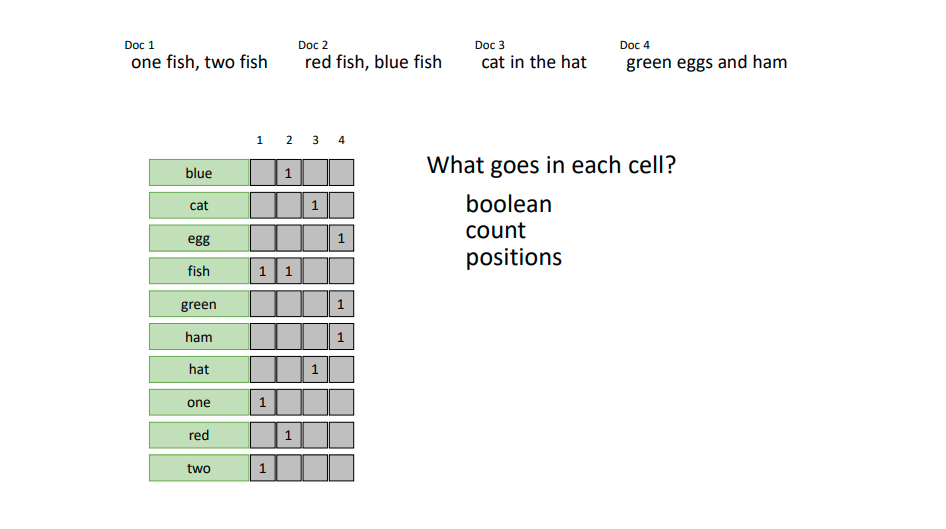

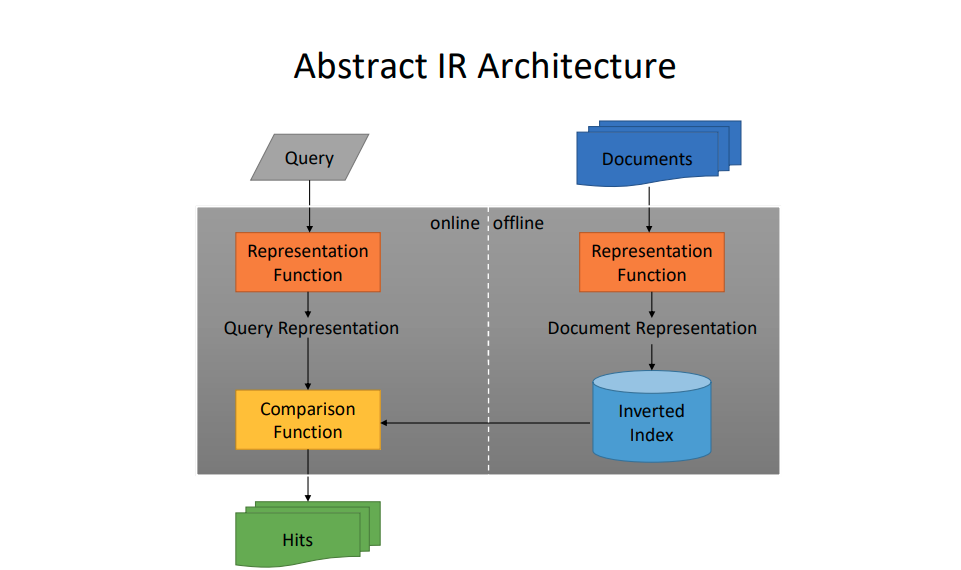

A posting consists of a record that associates a specific word (or term) with a set of documents in which that word appears.

Terminology:

- Inverted Index. Maps context to documents.

- Forward Index. Maps documents to context. (Seems strange to me, so a book’s index is “inverted”?)

- so the word is simply a mapped to in which document it is in

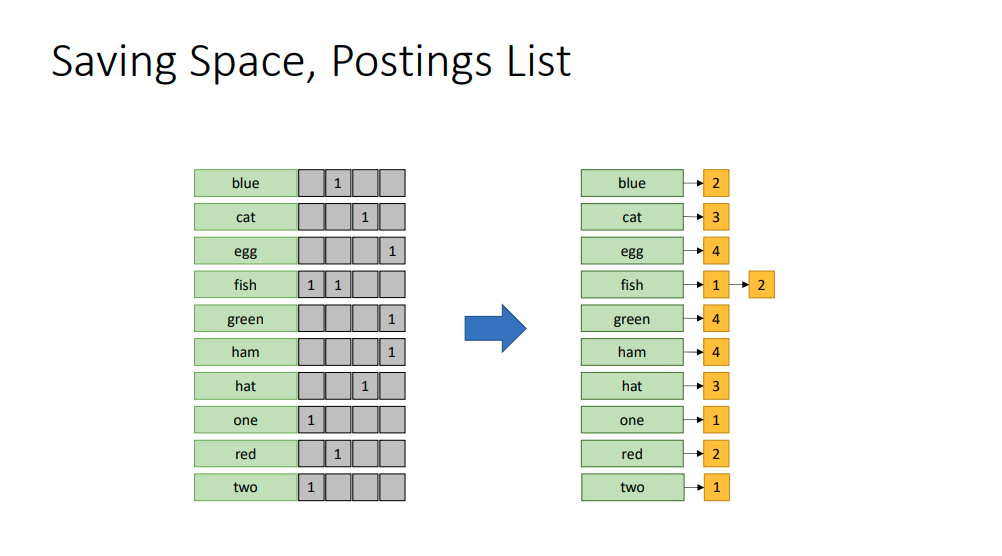

Postings size use Zipf’s Law:

- is the number of elements

- is the rank, anywhere between and

- is the characteristic exponent

Zipf’s Law: (also) linear in log-log space

- A few elements occur very frequently

- Many elements occur very infrequently

- Ohh, it’s like the 80-20 Rule - https://www.youtube.com/watch?v=fCn8zs912OE&t=253s&ab_channel=Vsauce

Instead of using that structure, use a linked list!!

Boolean Retrieval

Hits: Set of documents for which the query is true

Ranked retrieval is introduced.

- The relevance of a document is the sum of the wi value

Chapter 5: Graphs

How do we do mapreduce on graphs?

See

Data Mining: Part 1

A/B testing for ML: Step 1: Offline training and evaluation (holdout, cross-validate, etc) Step 2: A/B testing vs other methods

Data Mining: Part 2

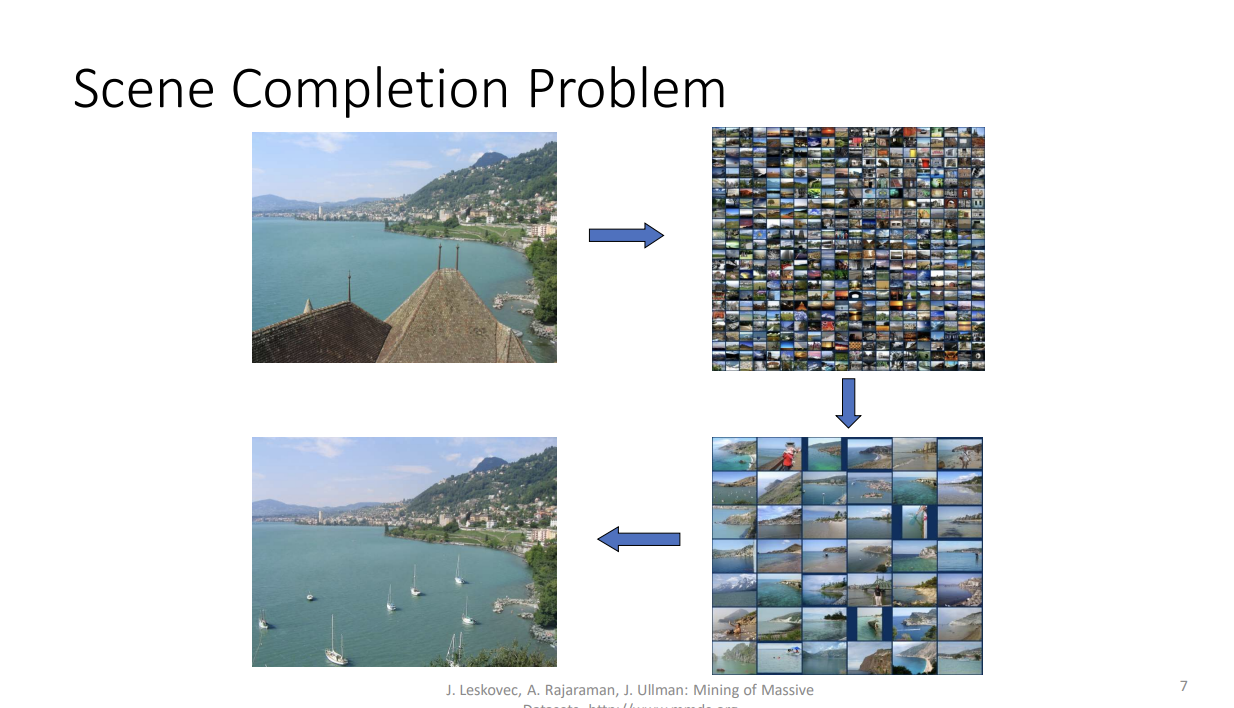

Problem:

- S – Set of Objects

- D(a,b) – Distance from object a to b

- t – maximum distance threshold

Goal: Find all unordered pairs (a,b) s.t. D(a,b) ≤ t

- Find the top 42 matching images, and then fill it in

Capturing similarity is difficult

- Images

- Protein

- Text

Given high-dimensional datapoints and some distance function : Find all pairs of datapoints s.t.

The naïve approach: just compute for all – not very big data Magic: – normal to want, and possible to achieve.

- Use LSH

Why do we care? The core technique applies to ML!

(Scalar) LSH to find Near Neighbours

- Make a Hash Table

- Use buckets that are wide (and overlapped, so items go in multiple buckets)

- Most values s.t. < c will have at least 1 bucket in common

- Most values > c will NOT share a common bucket

• Sets as vectors: Measure similarity by the cosine distance • Sets as sets: Measure similarity by the Jaccard distance • Sets as points: Measure similarity by Euclidean distance

Part 2 of data mining

Measuring distance between text documents: Remember, one goal is detecting duplicate news stories

- Diff: Too sensitive to rearrangement

- Word Count / Bag of Words: not sensitive enough to rearrangement

- Edit Distance: too difficult to compute

- doc2vec cosine distance: (too advanced)

- N-Grams: Just right

Jaccard How do n-grams give us a distance measure? Jaccard distance! This is used for sets.

Do we have sets? Yes: A document embedding is the set of n-grams it contains.

Here’s how we compute the similarity metric:

These are the distances

What

nto use?What n should I use?

There’s a few factors:

- Depends on if you’re doing byte-level or word-level n-grams

- Depends on what size of documents

For byte-level:

- 4-5 for short documents (tweets, sms, emails)

- 8-10 for long documents (webpages, books, papers)

For word-level:

- 2-3 for short documents

- 3-5 for long documents

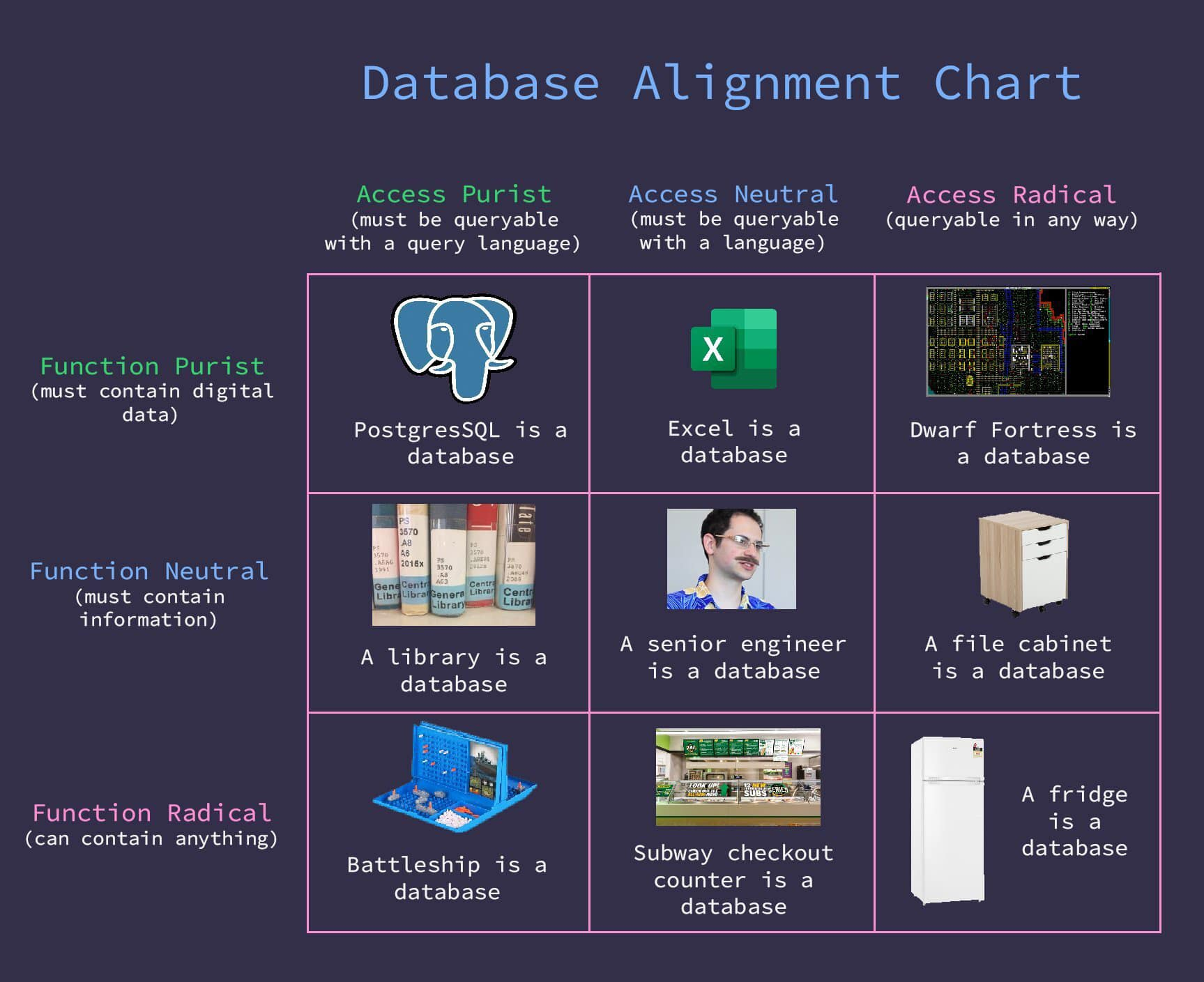

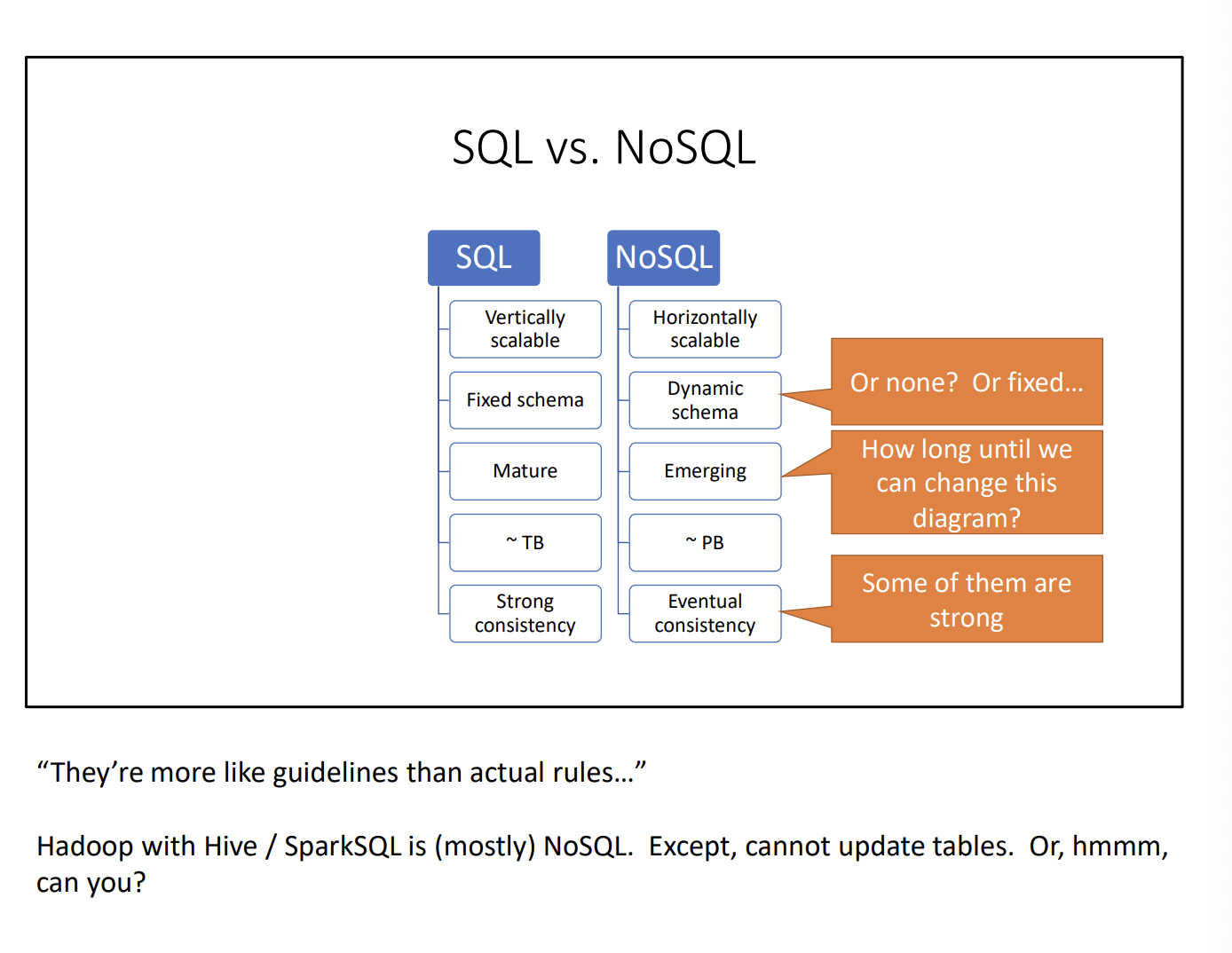

Chapter 7: Relational Data

- This is a cool diagram that the teacher showed

- https://www.reddit.com/r/ProgrammerHumor/comments/y3ykga/database_alignment_chart/

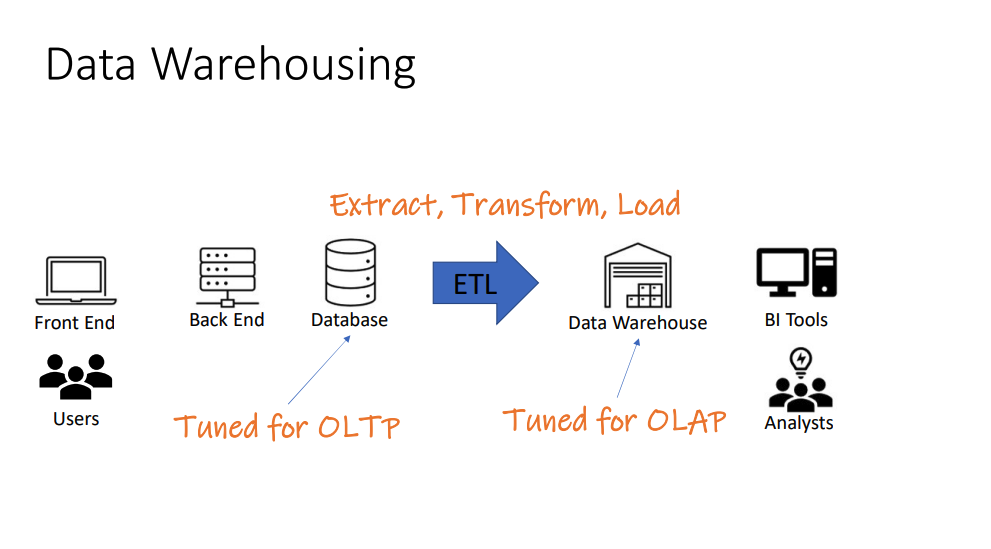

Database Workloads OLTP (Online Transaction Processing)

- Most Applications:

- E-Commerce, Banking, Reddit, etc.

- User Facing: Must be fast, low latency, concurrent (many users)

- Tasks: small set of common queries

- Access: Random reads, small writes

OLAP (Online Analytical Processing)

- BI and Data Mining

- Back-End: Batch workloads, low concurrency

- Tasks: Complex Analytics (Ad Hoc)

- Access: Full Table Scans, Big Data

Two patterns, one database

To satisfy both needs, we use a data warehouse

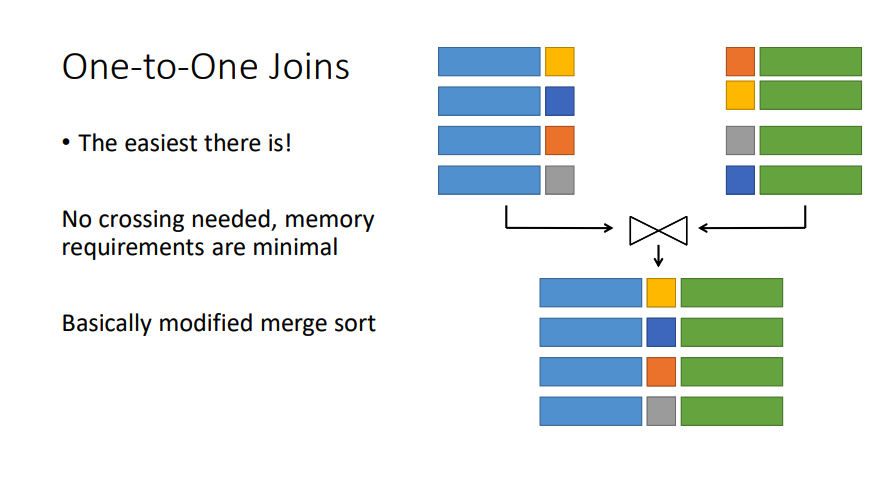

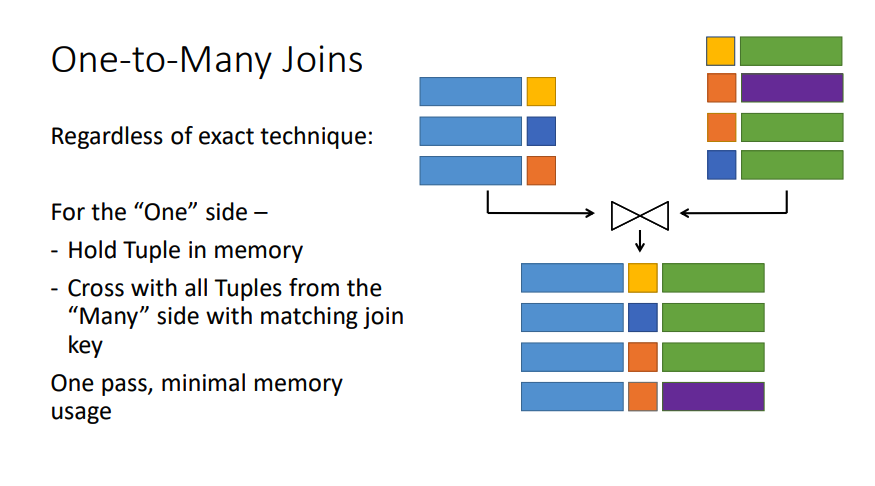

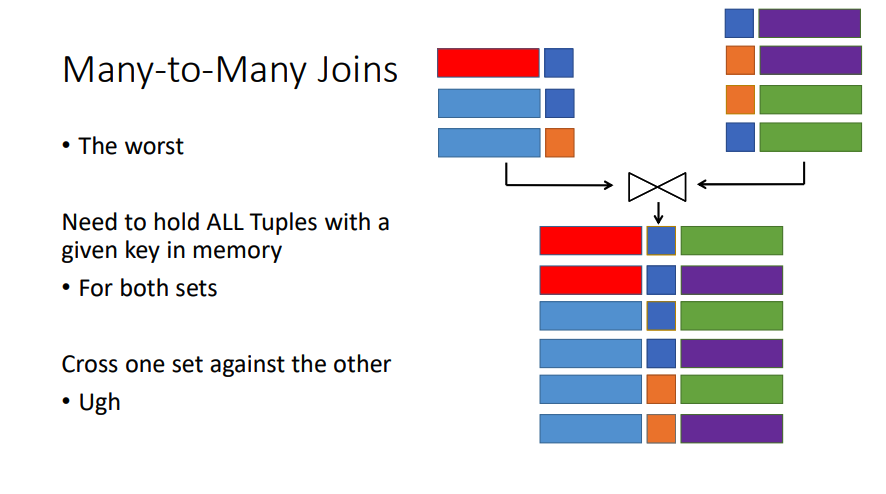

So how do we do all these operations in mapreduce?

- UNION → Hadoop MapReduce has a MultipleInputFile class

SUBSTRACT

- MultipleInputFiles again – Each mapper has:

- Key: An Entire Tuple, plus “Which Mapper Sent Me”

- Value: Not used

- Sort “RHS” tuples before equal “LHS” tuples

- Reducer

- Remember last RHS tuple

- Emit LHS tuples only if they do not equal the last RHS

CROSS PRODUCT

- NO, GOD NO

Inner joins

Chapter 10: Mutable State

https://student.cs.uwaterloo.ca/~cs451/slides/09%20-%20Mutable%20State%20-%20Combined.pdf

Chapter 9

Bloom Filter