Reinforcement Learning, Bellman Update

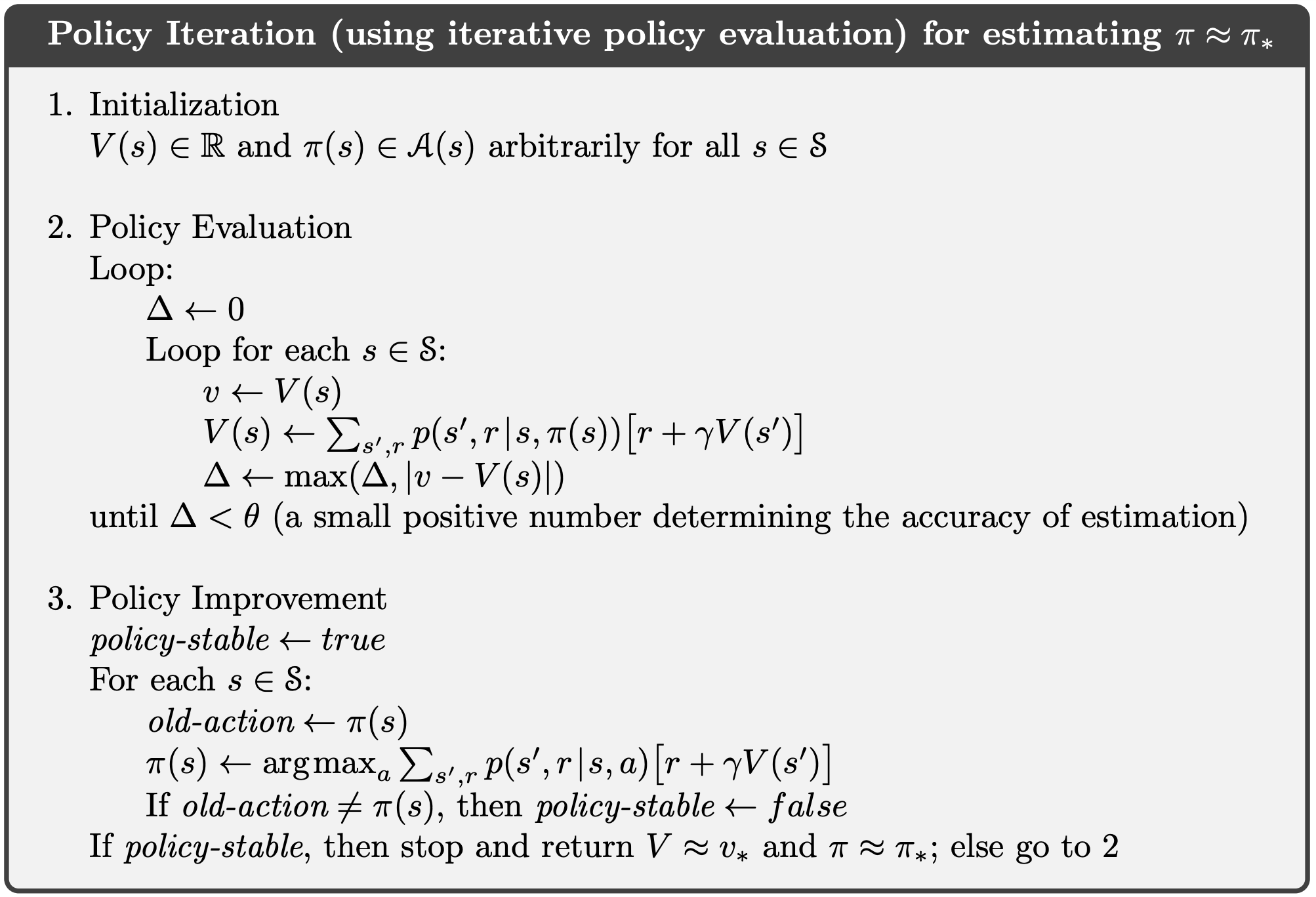

Policy Iteration

Intuition: build a policy, then build value function, and then build a better policy, and rinse and repeat until the optimal policy.

Policy iteration is the process of alternating between 2 things:

- Policy Evaluation With fixed current policy , find values with simplified Bellman expectation backups (iterate until values converge)

Other Notation from CS287

- Notice that the above is extremely similar, instead of using the in Value Iteration we can use to evaluate a particular policy.

- We are not taking the max because we don’t have a choice, the policy decides the action

- Policy Improvement With fixed utilities, find the best action according to one-step lookahead. You basically override the action for the very first action.

- Improve the policy by acting greedily with respect to (Policy Improvement)

How does acting epsilon greedy actually result in policy improvement??

Acting ε-greedy with respect to Q(s, a) leads to policy improvement because the greedy part exploits the best-known action, and the ε-randomness ensures exploration that lets us discover even better actions. Over time, this exploration refines Q(s, a), and thus, the greedy part improves too.

umm, but like wouldn’t that refine the Q negatively too?? Exploration can lead to bad actions. See proof below

Alternative notation from CS287: Repeat steps 1 and 2 until the policy converges, i.e.

Policy iteration always converges to the optimal policy . In terms of comparing with Value Iteration, this is a lot longer it seems, because we need to figure calculate the value function for a particular policy, and then come up with a new policy.

Note

The example below is done with action-value functions, whereas the textbook uses state-value functions. It’s essentially the same thing, but in Model-Free Control, we always use q-values, and model-free are where the more interesting problems are.

Proof (IMPORTANT)

We essentially act greedily with respect to the action-value functions, doing a Bellman Optimality Backup.

https://www.tuananhle.co.uk/notes/policy-improvement-theorem.html

This improves the value from any state over one step, It therefore improves the value function,