Cyrill Stachniss

Credits to Sachin. See his page for the actual courses that are recommended.

https://www.ipb.uni-bonn.de/photo12-2021/ https://www.ipb.uni-bonn.de/photo12-2021/#2

Lecture Notes

Note: I try to follow Drake notation for the order of the transforms, so these notes convert from Cyrill's notation to Drake notation.

The transform $X_{AB}$ tells you how to go from $B$ to $A$ (it maps coordinates expressed in $B$ to coordinates expressed in $A$), but you think about it as measuring frame $B$ in terms of frame $A$.
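To make the ordering concrete, here is a minimal sketch in Python with NumPy, assuming the Drake convention that `X_AB` is the pose of frame B measured in frame A. The helper `make_transform` and all numeric values are hypothetical and only for illustration; 2D homogeneous transforms are used to keep it short.

```python
import numpy as np

def make_transform(theta, t):
    """Build a 3x3 homogeneous 2D transform from a rotation angle and a translation."""
    c, s = np.cos(theta), np.sin(theta)
    return np.array([[c, -s, t[0]],
                     [s,  c, t[1]],
                     [0.0, 0.0, 1.0]])

# X_AB: pose of frame B measured in frame A (hypothetical example values).
X_AB = make_transform(np.pi / 2, [1.0, 0.0])
# X_BC: pose of frame C measured in frame B.
X_BC = make_transform(0.0, [2.0, 0.0])

# A point expressed in frame B, in homogeneous coordinates.
p_B = np.array([1.0, 0.0, 1.0])

# X_AB maps coordinates from B to A: p_A = X_AB @ p_B.
p_A = X_AB @ p_B

# Adjacent frame letters "cancel" when composing: X_AC = X_AB @ X_BC.
X_AC = X_AB @ X_BC

# Inverting swaps the frames: X_BA = inv(X_AB).
X_BA = np.linalg.inv(X_AB)
```

Reading the subscripts left to right, the inner letters have to match for a product to make sense, which is the main reason this ordering is convenient.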

Camera Basics and Propagation of Light

Slides: https://www.ipb.uni-bonn.de/html/teaching/photo12-2021/2021-pho1-02-camera.pptx.pdf

Notes moved to Camera.

Local Operators Through Convolutions - Part 1: Smoothing

Slides: https://www.ipb.uni-bonn.de/html/teaching/photo12-2021/2021-pho1-06-local-op-part1.pptx.pdf

I wasn’t going to go through this chapter. But then the teacher went through the concepts of kernels and filters in the geometric transformations lecture, and I had never heard of the box filter.

What is the difference between a filter and a kernel?

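They are often used interchangeably. Strictly speaking, the kernel is the small array of weights, and the filter is the operation of applying that kernel across the image.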

How is an image actually smoothed?

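- I thought it was just resampling, but smoothing is done with a local operator: each pixel is replaced by a weighted average of its neighborhood (for example with a box filter)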

So how is this chapter relevant?

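There are 3 types of operators:

- Point operators: the output pixel depends only on the input pixel at the same location

- Local operators: the output pixel depends on a neighborhood around the input pixel

- Global operators: the output pixel depends on the whole image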

We can use these for noise reduction in an image.

Box Filter

See Box Filter.
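As a rough illustration of smoothing with a local operator, here is a minimal box filter sketch in Python with NumPy. The function name `box_filter`, the odd kernel size, and the choice of replicating border pixels are assumptions for this example, not something taken from the lecture. Because the box kernel is symmetric, sliding it without flipping gives the same result as a true convolution.

```python
import numpy as np

def box_filter(image, size=3):
    """Smooth a 2D grayscale image with a size x size box (mean) kernel.

    Assumes `size` is odd so the kernel has a well-defined center.
    """
    kernel = np.ones((size, size)) / (size * size)
    pad = size // 2
    # Replicate border pixels so the output has the same shape as the input.
    padded = np.pad(image.astype(float), pad, mode="edge")
    out = np.zeros(image.shape, dtype=float)
    for r in range(image.shape[0]):
        for c in range(image.shape[1]):
            window = padded[r:r + size, c:c + size]
            out[r, c] = np.sum(window * kernel)
    return out

# A single bright pixel gets spread evenly over its 3x3 neighborhood.
image = np.zeros((5, 5))
image[2, 2] = 9.0
smoothed = box_filter(image, size=3)
```

The explicit loops are slow but make the "weighted average of a neighborhood" idea visible; a real implementation would use a vectorized or library convolution.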

Paddings

See Padding.
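A kernel centered on a border pixel reaches outside the image, so the image has to be extended first. Below is a small sketch of a few common padding strategies using `np.pad`; which strategies the linked Padding note covers is not stated here, so treat this selection as an assumption.

```python
import numpy as np

row = np.array([1, 2, 3, 4])

# Fill the border with a constant (zero padding).
zero_padded = np.pad(row, 2, mode="constant", constant_values=0)

# Repeat the nearest edge value.
edge_padded = np.pad(row, 2, mode="edge")

# Mirror the signal around the edge value (the edge itself is not repeated).
reflect_padded = np.pad(row, 2, mode="reflect")

# Treat the signal as periodic.
wrap_padded = np.pad(row, 2, mode="wrap")
```

Zero padding darkens the borders after smoothing, while edge or reflect padding usually gives more natural results near the image boundary.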

Notes on Convolution.

Geometric Transforms of Images

https://www.youtube.com/watch?v=QwU7iSJK8Rk&ab_channel=CyrillStachniss

Slides: https://www.ipb.uni-bonn.de/html/teaching/photo12-2021/2021-pho1-08-geometric-trans.pptx.pdf

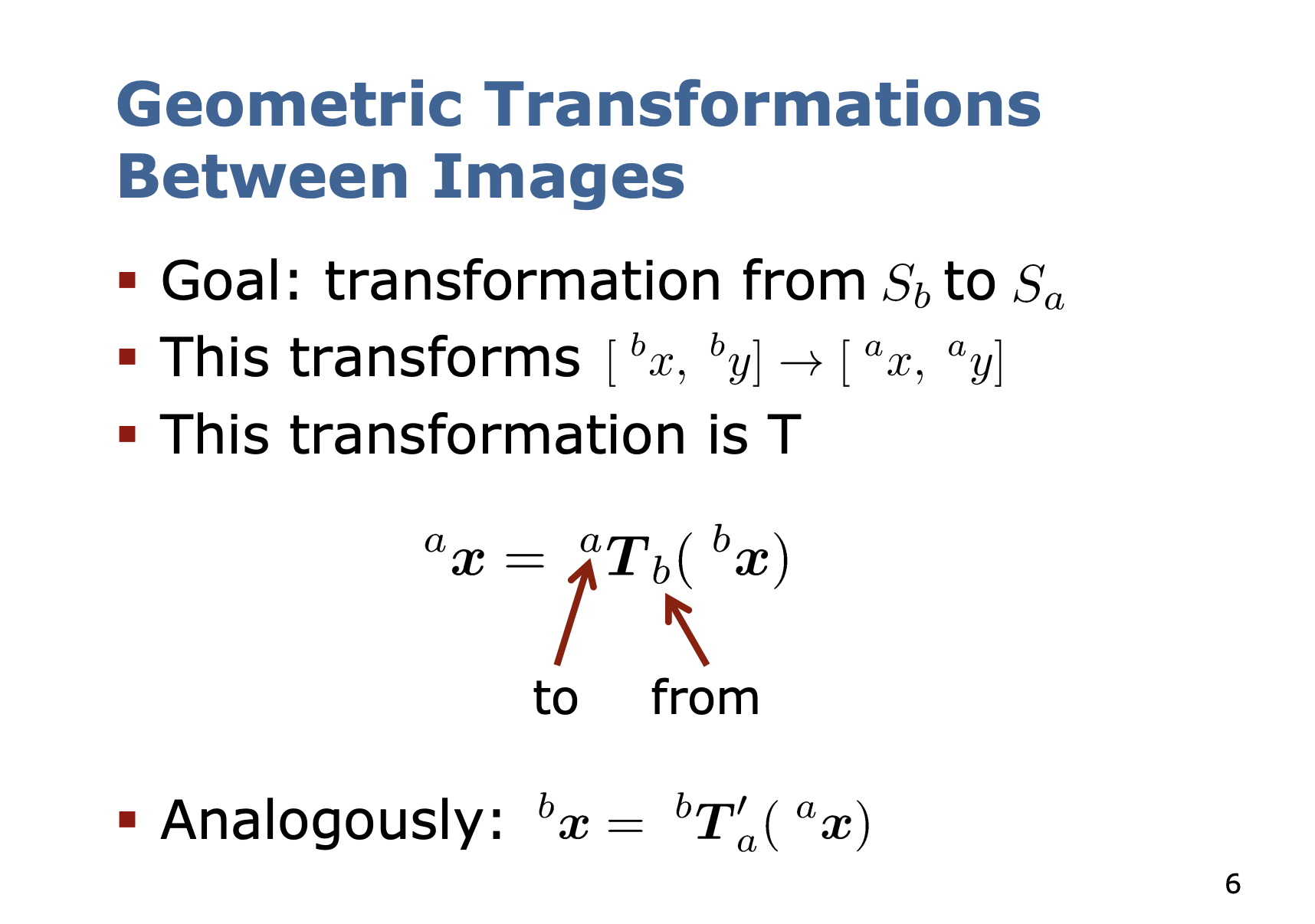

I already know my transforms, so this is just a matter of notation.

- from frame to frame

Though I don’t like this notation. Why is the “to” on the left..?

- So the confusion is that they say “from to ”, but if you actually look at the transformation itself, the values will be measured from to …

I will use the Drake Notation.

Generally, the transformed pixel coordinates are no longer integers. What should we do?

- My first instinct is to just round the number. But think about what is actually happening under the hood:

- Imagine you scale down an image. Multiple pixel intensities are going to map to the same location. Which pixel intensity do you pick?

The solution: resampling

Resampling

See Image Interpolation and Resampling.

Ways to do this: nearest-neighbor, bilinear, and bicubic interpolation.

Okay, but how do we map the values?

- I thought it was just multiplying each pixel by a certain value

- Think about correcting for distortions

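Ahh, I think the interpolation step happens during resampling.

- After the transform, the points are irregular (they no longer fall on the integer pixel grid)

- The mapping direction needs to be clear: forward (source pixel to target location) or inverse (target pixel back to a source location)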

This is important for scale invariance. But the other option is to use an Image Pyramid.
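The resampling idea above can be sketched as follows: loop over the target pixels, map each one back into the source image (inverse mapping), and interpolate the intensity at the resulting non-integer location. This is a minimal sketch assuming bilinear interpolation and a pure scaling transform; the function names `bilinear_sample` and `resize_inverse` are made up for this example.

```python
import numpy as np

def bilinear_sample(image, x, y):
    """Interpolate the intensity at a non-integer location (x = column, y = row)."""
    h, w = image.shape
    # Clamp so the 2x2 neighborhood stays inside the image.
    x = min(max(x, 0.0), w - 1.0)
    y = min(max(y, 0.0), h - 1.0)
    x0, y0 = int(np.floor(x)), int(np.floor(y))
    x1, y1 = min(x0 + 1, w - 1), min(y0 + 1, h - 1)
    ax, ay = x - x0, y - y0
    # Blend horizontally on the two rows, then blend the rows vertically.
    top = (1 - ax) * image[y0, x0] + ax * image[y0, x1]
    bottom = (1 - ax) * image[y1, x0] + ax * image[y1, x1]
    return (1 - ay) * top + ay * bottom

def resize_inverse(image, scale):
    """Rescale by visiting every target pixel and looking up its source location."""
    image = image.astype(float)
    h, w = image.shape
    out_h, out_w = int(round(h * scale)), int(round(w * scale))
    out = np.zeros((out_h, out_w))
    for r in range(out_h):
        for c in range(out_w):
            # Inverse mapping: target pixel (c, r) -> source location (c / scale, r / scale).
            out[r, c] = bilinear_sample(image, c / scale, r / scale)
    return out

image = np.array([[0.0, 10.0],
                  [20.0, 30.0]])
center = bilinear_sample(image, 0.5, 0.5)
upscaled = resize_inverse(image, 2.0)
```

Inverse mapping guarantees that every target pixel gets exactly one value, which avoids the holes and collisions that forward mapping produces. When scaling down by a large factor, smoothing first (or using an image pyramid) is usually needed to avoid aliasing; this sketch does not do that.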

Camera Parameters - Extrinsics and Intrinsics

Notes moved to Camera Calibration.

Visual Feature Part 1: Computing Keypoints

Slides: https://www.ipb.uni-bonn.de/html/teaching/photo12-2021/2021-pho1-10-features-keypoints.pptx.pdf

Notes moved to Keypoint.

Visual Feature Part 2: Feature Descriptors

Slides: https://www.ipb.uni-bonn.de/html/teaching/photo12-2021/2021-pho1-11-features-descriptors.pptx.pdf

Math Basics Often Used In Photogrammetry

Slides: http://www.ipb.uni-bonn.de/html/teaching/photo12-2021/2021-pho1-18-math-basics.pptx.pdf

TODO: Go through this. It will help me understand why we use eigenvalues.

Direct Linear Transform for Camera Calibration and Localization

Slides: http://www.ipb.uni-bonn.de/html/teaching/photo12-2021/2021-pho1-21-DLT.pptx.pdf

This is really fundamental. Notes in Direct Linear Transform.
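As a rough sketch of the idea, the DLT estimates the 3x4 projection matrix `P` from at least 6 known 3D points and their image observations by stacking two linear equations per point and taking the null vector via SVD. The code below is a minimal version under stated assumptions: noise-free synthetic data, no coordinate normalization, and hypothetical camera values (`K`, `P_true`) chosen only for the demo.

```python
import numpy as np

def dlt_projection_matrix(points_3d, points_2d):
    """Estimate a 3x4 projection matrix P (up to scale) from >= 6 point pairs."""
    rows = []
    for (X, Y, Z), (x, y) in zip(points_3d, points_2d):
        Xh = [X, Y, Z, 1.0]
        # Each correspondence x ~ P X gives two linear equations in the 12 entries of P.
        rows.append([*Xh, 0.0, 0.0, 0.0, 0.0, *[-x * v for v in Xh]])
        rows.append([0.0, 0.0, 0.0, 0.0, *Xh, *[-y * v for v in Xh]])
    A = np.array(rows, dtype=float)
    # The solution is the right singular vector of the smallest singular value.
    _, _, Vt = np.linalg.svd(A)
    return Vt[-1].reshape(3, 4)

def project(P, points_3d):
    """Project 3D points with P and return Euclidean pixel coordinates."""
    homog = np.hstack([points_3d, np.ones((len(points_3d), 1))])
    proj = homog @ P.T
    return proj[:, :2] / proj[:, 2:3]

# Hypothetical camera: intrinsics K, identity rotation, 5 units in front of the points.
K = np.array([[800.0, 0.0, 320.0],
              [0.0, 800.0, 240.0],
              [0.0, 0.0, 1.0]])
P_true = K @ np.hstack([np.eye(3), np.array([[0.0], [0.0], [5.0]])])

# Synthetic, non-coplanar control points (all coplanar points would be degenerate).
rng = np.random.default_rng(0)
points_3d = rng.uniform(-1.0, 1.0, size=(10, 3))
points_2d = project(P_true, points_3d)

P_est = dlt_projection_matrix(points_3d, points_2d)
reprojected = project(P_est, points_3d)
```

`P_est` matches `P_true` only up to a scale factor (and sign), so comparing reprojected pixels is the more meaningful check. With real, noisy measurements, normalizing the coordinates before building `A` generally improves conditioning.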

Least Squares - An Informal Introduction (Cyrill Stachniss)

This is really useful for a handful of problems in state estimation.

Graph-Based SLAM is the least-squares approach to SLAM.
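For intuition, here is a minimal linear least-squares sketch in Python with NumPy: fitting a line to a few measurements by minimizing the sum of squared residuals. The data values are made up for illustration. Graph-based SLAM is a nonlinear problem that is solved iteratively, so this only shows the linear building block.

```python
import numpy as np

# Hypothetical measurements that roughly follow y = 2x + 1.
x = np.array([0.0, 1.0, 2.0, 3.0, 4.0])
y = np.array([1.1, 2.9, 5.2, 6.8, 9.1])

# Each row of A is [x_i, 1], so A @ [slope, intercept] predicts y_i.
A = np.column_stack([x, np.ones_like(x)])

# Minimize the sum of squared residuals ||A @ params - y||^2.
params, _, _, _ = np.linalg.lstsq(A, y, rcond=None)
slope, intercept = params

# The normal equations A^T A params = A^T y give the same solution.
params_normal = np.linalg.solve(A.T @ A, A.T @ y)
```

In the nonlinear case, the same normal-equation step is applied repeatedly to a linearization of the error function around the current estimate.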

https://www.youtube.com/watch?v=r2cyMQ5NB1o&ab_channel=CyrillStachniss

Slides: file:///Users/stevengong/Downloads/pdf-2021/sse2-03-least-squares.pptx.pdf

Didn’t finish this one; focusing on the camera calibration part for now.

Camera Calibration using Zhang’s Method (Cyrill Stachniss)

Slides: http://www.ipb.uni-bonn.de/html/teaching/photo12-2021/2021-pho1-22-Zhang-calibration.pptx.pdf

Notes in Zhang’s Method.

Projective 3-Point Algorithm

This is how you actually infer the pose (position and orientation) of a calibrated camera, using three known 3D points and their image observations.

I want to skim this to understand how it is implemented at an architecture level.

Slides: http://www.ipb.uni-bonn.de/html/teaching/photo12-2021/2021-pho1-23-p3p.pptx.pdf

Notes in Projective 3-Point Algorithm.

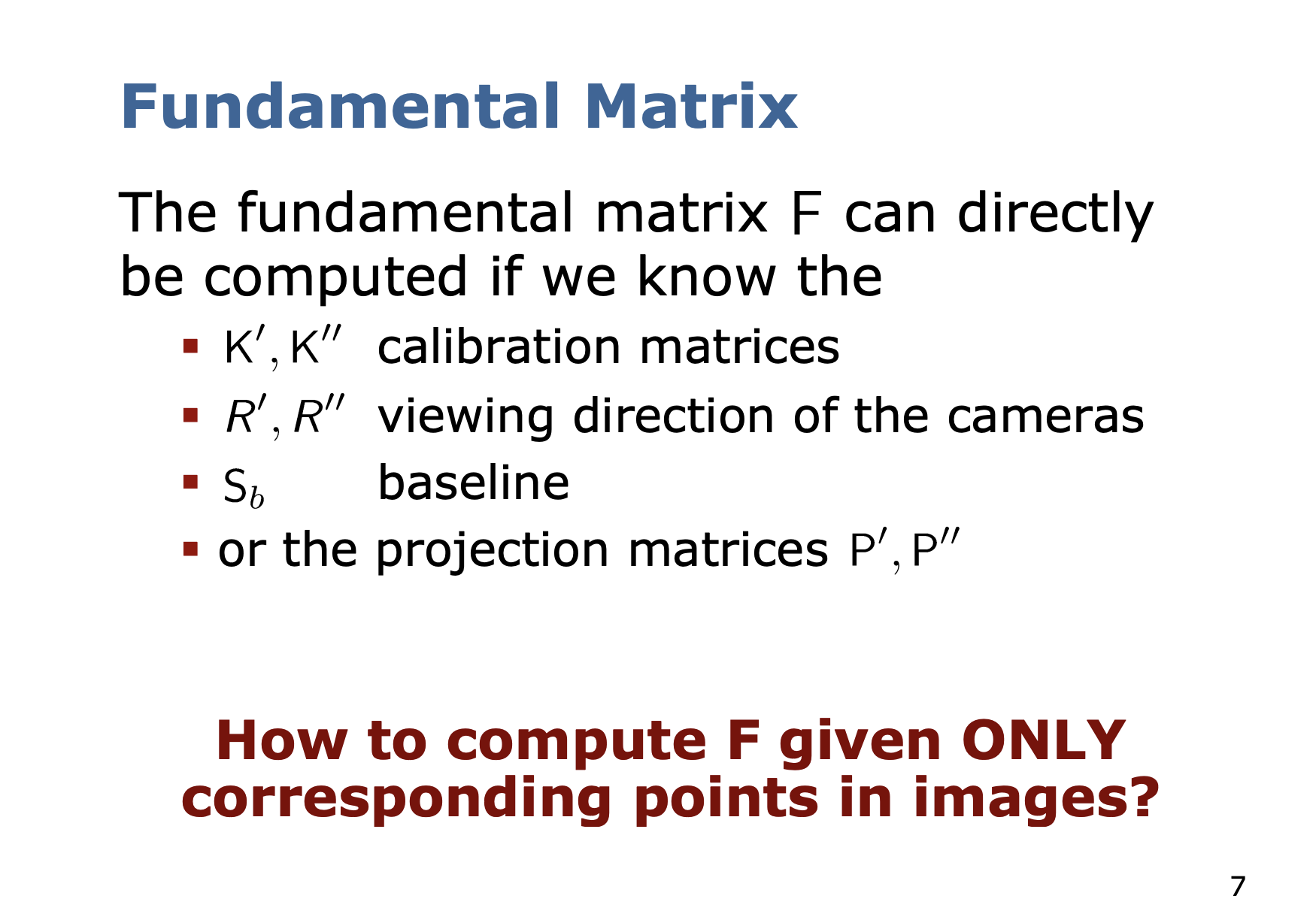

Relative Orientation, Fundamental and Essential Matrix (Cyrill Stachniss)

Slides: https://www.ipb.uni-bonn.de/html/teaching/photo12-2021/2021-pho2-02-fundamentalmatrix.pptx.pdf

Notes in Relative Orientation, Essential Matrix and Fundamental Matrix.

Epipolar Geometry Basics

Slides: https://www.ipb.uni-bonn.de/html/teaching/photo12-2021/2021-pho2-03-epipolar-geometry.pptx.pdf

See Epipolar Geometry.

Direct Solution

Slides: https://www.ipb.uni-bonn.de/html/teaching/photo12-2021/2021-pho2-04-fe-direct.pptx.pdf

I think you need to review DLT before going into this lecture.

Triangulation for Image Pairs

See Triangulation.
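One common way to triangulate a point from an image pair is the linear (DLT-style) method: each observation contributes two linear equations in the homogeneous 3D point, and the solution is the null vector of the stacked system. This is a minimal sketch under the assumption of known projection matrices and noise-free observations; the camera values are hypothetical, and the linked Triangulation note may describe a different (for example geometric) method.

```python
import numpy as np

def project(P, X):
    """Project a 3D point with a 3x4 projection matrix to pixel coordinates."""
    x = P @ np.append(X, 1.0)
    return x[:2] / x[2]

def triangulate(P1, P2, x1, x2):
    """Linear triangulation of one 3D point from two views."""
    # From x ~ P X: x * (row 3 of P) - (row 1 of P) = 0, and likewise for y.
    A = np.array([
        x1[0] * P1[2] - P1[0],
        x1[1] * P1[2] - P1[1],
        x2[0] * P2[2] - P2[0],
        x2[1] * P2[2] - P2[1],
    ])
    # The homogeneous point is the right singular vector of the smallest singular value.
    _, _, Vt = np.linalg.svd(A)
    X = Vt[-1]
    return X[:3] / X[3]

# Hypothetical stereo pair: same intrinsics, second camera shifted by 1 unit along x.
K = np.array([[800.0, 0.0, 320.0],
              [0.0, 800.0, 240.0],
              [0.0, 0.0, 1.0]])
P1 = K @ np.hstack([np.eye(3), np.zeros((3, 1))])
P2 = K @ np.hstack([np.eye(3), np.array([[-1.0], [0.0], [0.0]])])

X_true = np.array([0.5, -0.2, 4.0])
X_est = triangulate(P1, P2, project(P1, X_true), project(P2, X_true))
```

With noisy observations the two rays no longer intersect exactly, and this linear solution minimizes an algebraic error rather than the reprojection error.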

The Basics about Bundle Adjustment

Notes in Bundle Adjustment.